Update

November 2021 Update: As the tooling has matured, we have started using React frameworks like Next.js, which solve this exact problem. At this point, we'd recommend using Next.js for such cases.

The problem with Single Page Applications and SEO

We were dealing with a big Single Page Application written in React, using Redux and Saga.

The main goal was to index the public views in Google and add rich content support when you share a link on social media.

After some quick research on Google and SPAs, it turned out that if you are lucky enough, you may get into the “real browser” crawl queue. But Google was using a really old version of Chrome to render the pages, a version that we didn't support at all. And even if we added support for old Chrome, we would still need a solution for the rich content problem.

Server-side rendering! The approach of running the same codebase on the server and on the client sounds great. But in order to do that, we would have needed to change a significant part of our frontend codebase. Many of the third-party packages that we were using didn't support server-side rendering, and there were some challenges in getting redux-saga to work on the server.

We realized that going from client-side rendering to server-side rendering was not going to be a task for a day or two. So we needed a quicker solution.

How prerender.io solved that problem

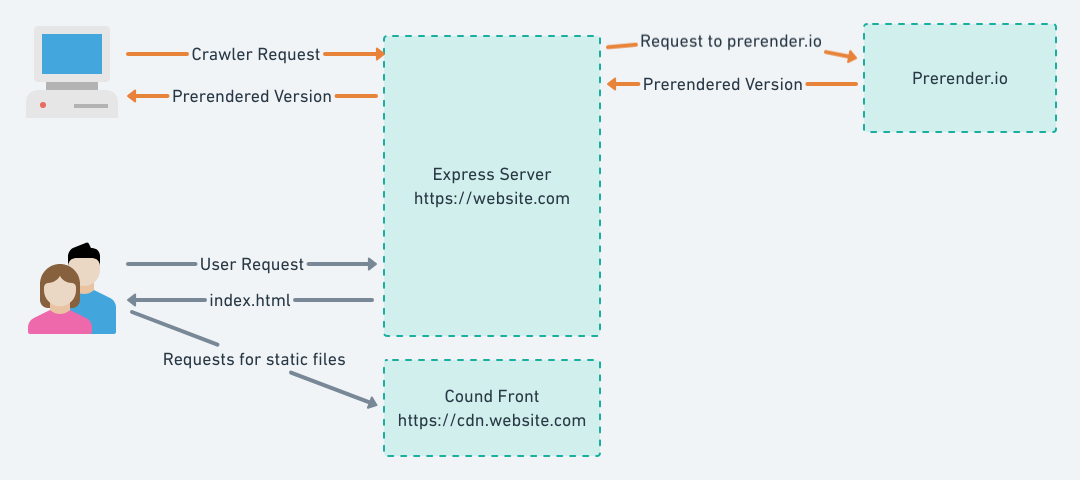

prerender.io scrapes your website on a regular basis using the latest Chrome. Then it stores all the rendered HTML pages into a database and gives you an API for that so you can access the rendered HTML for every URL of your website.

The only thing you need to do is add a proxy that checks the user agent. If the user agent is a search engine or some other kind of crawler (Facebook, LinkedIn, etc.), you just send an API call, get the rendered HTML from prerender.io, and return it to the crawler. If the user agent is not a crawler, you just return the index.html of your SPA so the JS can kick in.
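To make that decision concrete, here is a minimal sketch of the check such a proxy performs. The `CRAWLER_PATTERNS` list and the `isCrawler` and `routeRequest` names are hypothetical and only for illustration. The service URL and the `X-Prerender-Token` header are the ones we understand the prerender-node middleware to use, so treat them as assumptions and check the current documentation.

```javascript
// Hypothetical, deliberately short list of crawler user-agent fragments.
// A real middleware ships a much longer list.
const CRAWLER_PATTERNS = [
  'googlebot',
  'bingbot',
  'facebookexternalhit',
  'linkedinbot',
  'twitterbot',
];

// Returns true when the User-Agent header contains one of the fragments above.
function isCrawler(userAgent) {
  if (!userAgent) return false;
  const ua = userAgent.toLowerCase();
  return CRAWLER_PATTERNS.some((pattern) => ua.includes(pattern));
}

// Decides what the proxy should do for a request.
// `pageUrl` is the full public URL that was requested.
function routeRequest(userAgent, pageUrl, prerenderToken) {
  if (!isCrawler(userAgent)) {
    // Regular visitors get the SPA shell and render the page in the browser.
    return { action: 'serve-index-html' };
  }
  return {
    action: 'fetch-prerendered-html',
    // Assumption: service URL and header name as used by prerender-node.
    url: 'https://service.prerender.io/' + pageUrl,
    headers: { 'X-Prerender-Token': prerenderToken },
  };
}
```

The sketch only describes the request to make. It performs no network call, which keeps the decision logic easy to test on its own.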

As simple as that! And the best part is that you don't need to write that proxy yourself. Prerender.io has configs for all the common web servers: Apache, Nginx, HAProxy, Express, etc.

The actual setup

Requirements

We had a couple of requirements for the setup:

- Host the proxy on Heroku like the rest of the backend that we had.

- Use AWS CloudFront for serving static files (.js, .css, and images). You don't want to host static files on Heroku.

- Keep the environment variables in one place.

- Keep the code separated from the UI project. (Different repository)

Implementation

You can check the actual implementation in this sample repository: https://github.com/HackSoftware/prerenderio-heroku-example

We decided to go with an Express server and use the prerender.io Express middleware:

const express = require('express');

const secure = require('express-force-https');

const prerender = require('prerender-node');

// Load from env vars

const port = process.env.PORT;

const indexHtml = process.env.INDEX_HTML;

const prerenderToken = process.env.PRERENDER_TOKEN;

const app = express();

// Use secure middleware to redirect to https

app.use(secure);

// Use prerender io middleware

app.use(prerender.set('prerenderToken', prerenderToken));

// Serve index.html on every url.

app.get('*', (req, res) => {

  res.send(indexHtml);

});

app.listen(port);

The reasons for serving the index.html from an environment variable are:

- We want to keep that code separate from the UI project (in a different repository), so we need to load the index.html from somewhere.

- We need a low-latency response, so we had to keep index.html in memory.

- The only file from the UI project that the proxy needs to serve is the index.html. Every other static file loads from the CDN.
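Since the proxy depends entirely on those environment variables, it can help to validate them once at startup. The following is a minimal sketch rather than part of our actual setup. The `loadConfig` name is hypothetical, while the variable names are the ones from the server code above.

```javascript
// Reads the proxy's settings from an environment-like object and throws
// when a required value is missing, so a misconfigured deploy fails at boot
// instead of starting without the page it is supposed to serve.
function loadConfig(env) {
  const required = ['PORT', 'INDEX_HTML', 'PRERENDER_TOKEN'];
  const missing = required.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error('Missing environment variables: ' + missing.join(', '));
  }
  return {
    port: Number(env.PORT),
    indexHtml: env.INDEX_HTML,
    prerenderToken: env.PRERENDER_TOKEN,
  };
}
```

In the server, this would be called once as `loadConfig(process.env)` before creating the Express app.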

The index.html file changes only when we release a new version of the UI. So after each release, we just push the new index.html to Heroku.
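One thing to keep in mind with this approach is the size of the file. To our knowledge, Heroku limits the combined size of all config vars of an app to 32 KB, so it is worth checking that figure against the current Heroku documentation. Below is a minimal sketch of a check that could run before the push. The constant and the `remainingConfigBytes` name are hypothetical.

```javascript
// Assumption: Heroku caps the combined size of all config vars of an app
// at 32 KB. Verify this number in the current Heroku documentation.
const HEROKU_CONFIG_LIMIT_BYTES = 32 * 1024;

// Returns how many bytes remain for the other config vars once `indexHtml`
// is stored. A negative result means the file alone is already too large.
function remainingConfigBytes(indexHtml, limitBytes = HEROKU_CONFIG_LIMIT_BYTES) {
  return limitBytes - Buffer.byteLength(indexHtml, 'utf8');
}
```

On CI, the input would be the built file, for example `remainingConfigBytes(require('fs').readFileSync('build/index.html', 'utf8'))`. The size is measured in bytes rather than characters because non-ASCII characters take more than one byte in UTF-8.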

Here is the script that we run on CI for every deploy of the UI project:

# Change the public URL to the CDN so that all of the files get downloaded from the CDN.

export PUBLIC_URL=https://cdn.domain.com/

sudo apt-get install heroku

# Authenticate to Heroku.

echo "machine api.heroku.com" >> ~/.netrc

echo " login email@example.com" >> ~/.netrc

echo " password UUID GOES HERE" >> ~/.netrc

# Read the index.html that we built and load it as an env var into the Heroku app.

heroku config:unset INDEX_HTML --app app-name

heroku config:set INDEX_HTML="$(cat build/index.html)" --app app-name

# Upload the release to S3.

aws s3 sync build s3://DEST_BUCKET/

# Invalidate the CloudFront distribution.

aws configure set preview.cloudfront true

aws cloudfront create-invalidation --distribution-id DISTRIBUTION_ID --paths "/*"

Wrap-up

Does it work?

Yes. Google indexed all of the pages that we have. We also started getting rich content when a URL was shared on social platforms.

Is it expensive?

It depends on how frequently you want prerender.io to re-cache your pages. In this case, the content was not really dynamic, so we were fine with re-caching every 5 days. Here is the pricing page: https://prerender.io/pricing
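To get a feel for the volume behind that setting, here is a rough estimate of how many renders a given re-cache interval produces per month. It assumes every cached page is re-rendered exactly once per interval. The `estimateMonthlyRenders` name and the example numbers are hypothetical, and how this maps to an actual price depends on the current plans.

```javascript
// Rough number of page renders per month when every cached page is
// re-rendered once per `recacheIntervalDays`.
function estimateMonthlyRenders(pageCount, recacheIntervalDays, daysInMonth = 30) {
  return Math.ceil(pageCount * (daysInMonth / recacheIntervalDays));
}

// Example with a hypothetical site of 1000 public pages, re-cached every 5 days.
console.log(estimateMonthlyRenders(1000, 5)); // prints 6000
```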

Isn't that dangerous?

In theory, yes. Showing different content based on the user agent is not recommended. It is known as cloaking and is considered black-hat SEO. 🎩

However, Google explicitly documents this approach under the name dynamic rendering and mentions prerender.io as one of the tools for it. You can read more about it here: https://developers.google.com/search/docs/guides/dynamic-rendering

Is this a long-term solution?

We've been using it for 4 months without any problems so far. However, we are definitely looking forward to migrating the project to server-side rendering. That should be far more sustainable, and the SEO should benefit even more from the faster load time.